We all have a voice in our heads. It’s the whispered rehearsal before a big presentation, the silent pep talk before asking for a raise, or the self-critique that reminds us what we wish we hadn’t said. For most of human history, this inner monologue has been locked away, private, and unreachable. But researchers at Stanford University are now tugging at the boundary between private thought and public expression, building brain implants that can decode inner speech—the silent conversations we have with ourselves—and translate them into audible words.

At the heart of this work lies a breakthrough: for the first time, scientists have shown that it’s possible to detect and decode inner monologues without requiring the participant to move or even attempt to speak. Unlike existing brain-computer interfaces (BCIs) that rely on attempted speech (signals produced when someone tries to form words with the lips and tongue), this new approach reads activity in the brain’s motor cortex while words are only imagined. In early tests, it reached up to 74% accuracy in translating inner words into speech. For people with paralysis or locked-in syndrome, the implications are enormous: communication could become faster, more natural, and less exhausting.

The Promise of Direct Thought-to-Speech

Traditional speech BCIs require significant effort from participants, who must attempt to move speech muscles even if their bodies can’t respond. That process excludes those with the most severe impairments. The Stanford system, by contrast, taps into silent thought itself. Imagine someone with ALS, unable to move any part of their body other than their eyes, regaining a fluent voice—not by typing with eye movements or blinking out letters, but by simply thinking their words.

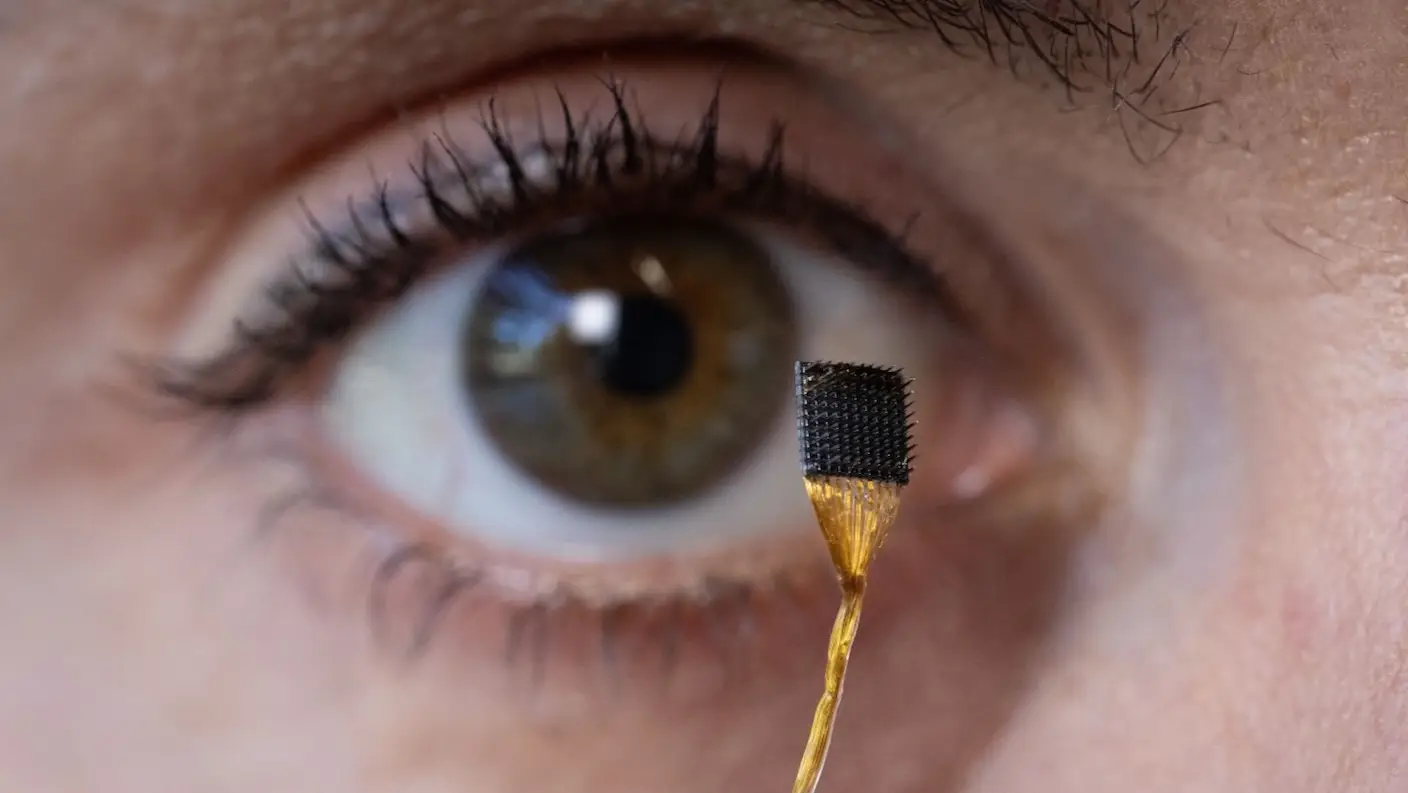

The implant uses a network of electrodes to record tiny electrical signals in the motor cortex. These signals are then fed into an AI system trained to recognize patterns linked with specific sounds and words. Over time, the system learns to distinguish not just words but sentences, enabling real-time decoding of inner dialogue. Early trials have shown that participants can silently “say” words like “kite” or “day” and have the system translate them into speech with surprisingly little delay.
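To make the idea of "recognizing patterns linked with specific words" concrete, here is a deliberately tiny sketch. It is not the Stanford team's model, which the article does not describe in detail. It assumes each silent word has already been reduced to a short list of numbers (one hypothetical activity value per electrode) and uses a simple nearest-centroid classifier as a stand-in for the real AI system. All data and function names are made up for illustration.

```python
from math import dist


def train_centroids(examples):
    """Average the feature vectors recorded for each word.

    `examples` maps a word to a list of equal-length feature vectors
    (hypothetical per-electrode activity summaries, not real recordings).
    """
    centroids = {}
    for word, vectors in examples.items():
        n = len(vectors)
        # zip(*vectors) groups the values electrode by electrode.
        centroids[word] = [sum(column) / n for column in zip(*vectors)]
    return centroids


def decode(centroids, features):
    """Return the word whose average pattern is closest to `features`."""
    return min(centroids, key=lambda word: dist(centroids[word], features))


# Made-up training data: three trials per word, four "electrodes" each.
training = {
    "kite": [[0.9, 0.1, 0.4, 0.2], [1.0, 0.2, 0.5, 0.1], [0.8, 0.0, 0.3, 0.3]],
    "day": [[0.1, 0.8, 0.2, 0.7], [0.2, 0.9, 0.1, 0.6], [0.0, 1.0, 0.3, 0.8]],
}
centroids = train_centroids(training)

# A new, unseen trial that resembles the "kite" pattern.
print(decode(centroids, [0.85, 0.15, 0.45, 0.2]))
```

A real decoder would work on far more electrodes, on signals that change over time, and on sounds and sentences rather than two isolated words, but the principle is the same: learn what each word's neural pattern looks like, then match new activity against it.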

Safeguards for Privacy

Of course, the idea of machines listening to your inner voice raises a host of ethical concerns. Who gets to access your private thoughts? How do you keep your mind from broadcasting stray ideas, intrusive memories, or emotions you’d rather keep hidden?

The researchers anticipated this and built in safeguards. One of the most promising is the concept of a “neural password”: a mental trigger word that activates or deactivates the decoder. Think of it as a thought-based lock screen. One participant used the password “chittychittybangbang,” which the system detected with 99% reliability. Unless that phrase was silently spoken first, the decoder remained dormant. The aim is to keep communication voluntary and deliberate.
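The lock-screen analogy can be sketched in a few lines of code. The example below is an illustration only, not the researchers' implementation: it assumes the decoder emits one recognized token at a time and that the password toggles output on and off. The `PasswordGate` class and the sample token stream are hypothetical.

```python
class PasswordGate:
    """Pass decoded words through only while the decoder is unlocked."""

    def __init__(self, password):
        self.password = password
        self.unlocked = False  # start locked, so nothing is voiced by default

    def feed(self, token):
        """Process one decoded token; return it if it should be spoken, else None."""
        if token == self.password:
            self.unlocked = not self.unlocked  # the password toggles the gate
            return None  # the password itself is never voiced
        return token if self.unlocked else None


gate = PasswordGate("chittychittybangbang")
stream = ["secret", "chittychittybangbang", "hello", "world",
          "chittychittybangbang", "private"]
spoken = [word for word in (gate.feed(token) for token in stream) if word is not None]
print(spoken)  # ['hello', 'world']
```

Everything thought before the password, and everything after it is repeated, stays private. Only the words in between reach the speaker.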

The Road Ahead

The current system is still in its infancy. Accuracy varies, and error rates rise when participants move from small vocabularies to large, open-ended dictionaries. Sentences like “I think it has the best flavor” sometimes emerge as “I think it has the best player.” While occasionally amusing, these mistakes underline the complexity of decoding language from neural signals.
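One common way to put a number on mistakes like this is word error rate, a standard speech-recognition metric: the fewest word substitutions, insertions, and deletions needed to turn the decoded sentence into the intended one, divided by the length of the intended sentence. Whether the study scored its results exactly this way is an assumption here. The sketch below applies the metric to the article's own example.

```python
def word_error_rate(reference, hypothesis):
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference sentence must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


intended = "I think it has the best flavor"
decoded = "I think it has the best player"
print(round(word_error_rate(intended, decoded), 3))  # 0.143
```

One wrong word out of seven gives an error rate of about 14%, which shows how a single slip can change the meaning of an otherwise accurate sentence.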

Yet even with its flaws, the technology is moving quickly. With better sensors, refined algorithms, and personalized AI models trained on an individual’s unique brain signals, the day may not be far off when people with total paralysis can once again hold free-flowing, natural conversations. For families who have waited years—or decades—to hear a loved one’s voice again, that future is nothing short of revolutionary.

The implications extend beyond medical applications. As decoding improves, society will have to wrestle with deeper questions: Could inner speech implants ever be used outside of healthcare? What happens if thought-to-speech becomes a mainstream tool, not just for people with disabilities but for anyone wanting hands-free communication? Will privacy safeguards be strong enough when the stakes rise?

One thing is clear: the boundary between our most private space—the mind—and the outside world is being redrawn in real time. And once thoughts can be translated into words on demand, the way we think about speech, communication, and even identity may never be the same.
