The strongest materials of the last century were discovered with hammers, furnaces, and patience. The strongest materials of the next century will be discovered with prompts. In labs where lasers etch features thinner than a red blood cell and algorithms hunt Pareto fronts, researchers have now taught artificial intelligence to design a carbon nanolattice that carries the compressive punch of carbon steel while weighing about as much as Styrofoam. That is not a metaphor. It’s a new class of matter, architected by code, born in light, and refined in heat, that could remake aerospace, mobility, construction, sport, and any place where every gram and every newton matters.

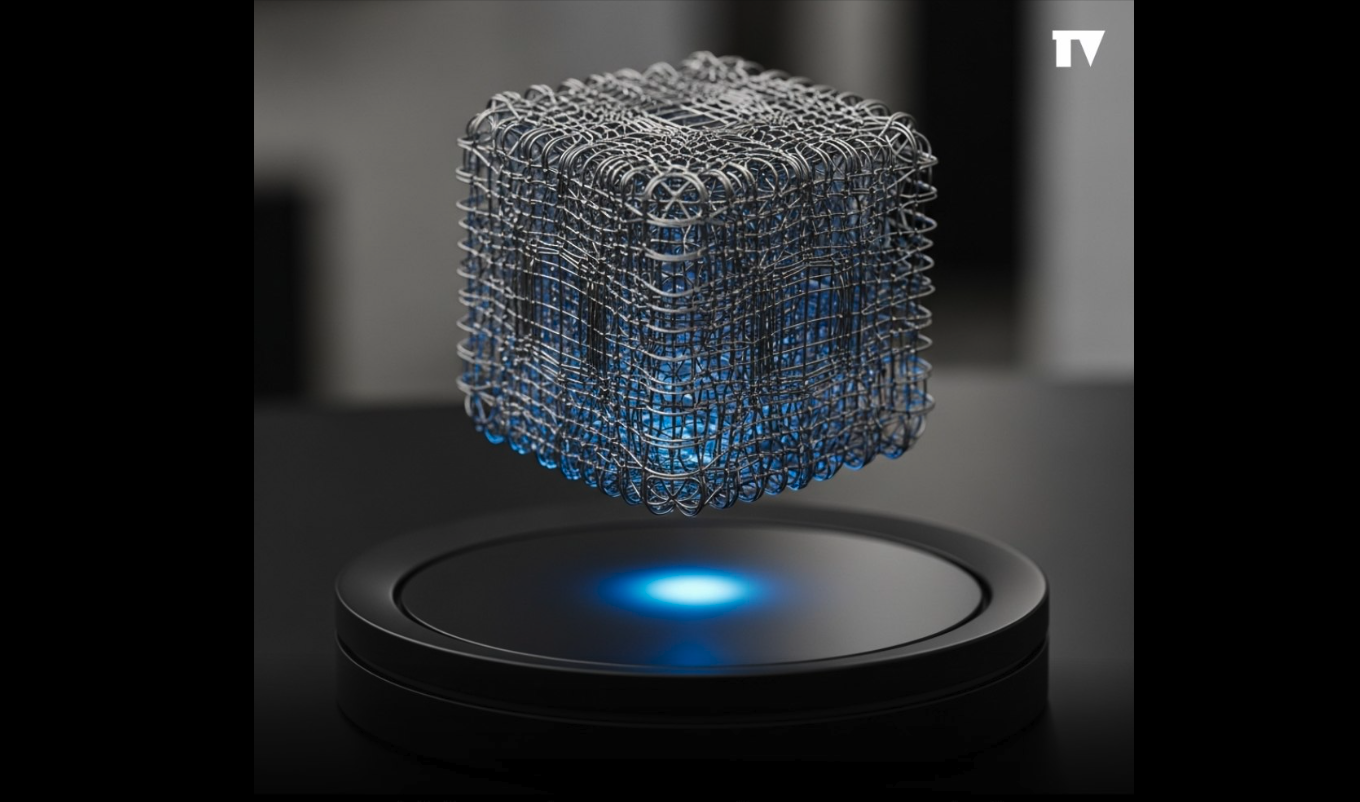

Here’s the shift. Instead of starting with a block of material and carving it down, engineers treat “material” as a geometry problem. They ask: which internal architecture, at the nanoscale, distributes stress most evenly and wastes the least? A multi-objective Bayesian optimizer takes that question and searches a vast space of beam shapes and node topologies, not by brute force but by learning: it predicts which designs will push the trade-off among stiffness, strength, and density, then samples there. The winning blueprints aren’t intuitive to the human eye; they often thicken near nodes, slenderize in mid-spans, and curve in ways that neutralize stress concentrations. The result is a lattice whose failure mode is no longer “crack at the joint,” but “share the load everywhere.”
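To make "hunting Pareto fronts" concrete, here is a minimal sketch of the dominance test that sits underneath any multi-objective search. It is not the researchers' optimizer and contains no Bayesian model; it only filters a handful of candidates down to the ones that no other candidate beats on every axis. The `Design` type, the design names, and every number are made up for illustration.

```python
from typing import List, NamedTuple


class Design(NamedTuple):
    """Hypothetical candidate lattice; all values below are illustrative."""
    name: str
    density: float    # kg/m^3, lower is better
    strength: float   # MPa, higher is better
    stiffness: float  # GPa, higher is better


def dominates(a: Design, b: Design) -> bool:
    """True if a is at least as good as b everywhere and better somewhere."""
    no_worse = (
        a.density <= b.density
        and a.strength >= b.strength
        and a.stiffness >= b.stiffness
    )
    strictly_better = (
        a.density < b.density
        or a.strength > b.strength
        or a.stiffness > b.stiffness
    )
    return no_worse and strictly_better


def pareto_front(designs: List[Design]) -> List[Design]:
    """Keep only designs that no other design dominates."""
    return [d for d in designs if not any(dominates(o, d) for o in designs)]


# Made-up candidates, not measured data.
candidates = [
    Design("uniform_beam", 200.0, 150.0, 1.5),
    Design("thick_nodes", 200.0, 300.0, 2.4),
    Design("light_curved", 130.0, 190.0, 1.2),
    Design("heavy_weak", 210.0, 140.0, 1.4),
]

front = pareto_front(candidates)
front_names = [d.name for d in front]
```

A Bayesian optimizer adds a learned surrogate on top of this test, so it can guess which untested geometries are likely to land on the front before anyone prints them.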

Design is only half the story; manufacturing is the magic trick. Two-photon polymerization “writes” these nanolattices directly into a photosensitive resin with voxels a few hundred nanometers wide. Then pyrolysis converts the polymer into glassy, sp²-rich carbon while shrinking the whole structure to 20% of its printed size, locking in a gradient atomic architecture with a stiffer, cleaner outer shell. Shrink the struts to ~300 nm, and size effects kick in—fewer defects, more aromatic bonding, higher specific strength. Print millions of unit cells in parallel with multi-focus beams and suddenly these once-microscopic sculptures scale to millimeters and, eventually, to parts you can hold—light enough to balance on a soap bubble, strong enough to support more than a million times their weight.
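The shrinkage matters at design time, because the printer has to write oversized features that land on target after the furnace. The arithmetic can be sketched as follows, assuming the 20% figure is a linear scale factor and that shrinkage is uniform in all directions; both are assumptions of this sketch, and the function names are hypothetical.

```python
# Assumption: "20% of its printed size" is read as a linear scale factor,
# applied equally along every axis (isotropic shrinkage).
LINEAR_SCALE = 0.20


def printed_size_nm(final_nm: float, linear_scale: float = LINEAR_SCALE) -> float:
    """Feature size to print so that it shrinks to final_nm after pyrolysis."""
    return final_nm / linear_scale


def volume_fraction_remaining(linear_scale: float = LINEAR_SCALE) -> float:
    """Fraction of the printed volume left under isotropic linear shrinkage."""
    return linear_scale ** 3


# Strut diameter to print for a ~300 nm strut after pyrolysis.
printed_strut = printed_size_nm(300.0)

# Share of the printed volume that survives the furnace.
volume_left = volume_fraction_remaining()
```

Under those assumptions a ~300 nm strut starts life at about 1,500 nm, and the finished part occupies less than 1% of the volume that was printed.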

On an engineer’s Ashby chart, these AI-born lattices occupy an uncharted quadrant: densities of ~125–215 kg/m³ with compressive strengths in the 180–360 MPa band—steel-like strength at foam-like weight. In head-to-head tests at equal density, the Bayesian-optimized geometries delivered up to 118% higher strength and 68% higher stiffness than standard lattices. That’s not incremental; that’s a new slope on the performance frontier.
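Two quick calculations help read those figures. The sketch below computes the envelope of specific strength (strength divided by density) implied by the quoted ranges, and converts "X% higher" into a plain multiplier. It does not assume which strength goes with which density, so it reports only the widest possible bounds; the helper names are illustrative.

```python
def specific_strength(strength_mpa: float, density_kg_m3: float) -> float:
    """Specific strength in MPa·m^3/kg."""
    return strength_mpa / density_kg_m3


def multiplier(percent_higher: float) -> float:
    """Convert 'N% higher' into a factor relative to the baseline."""
    return 1.0 + percent_higher / 100.0


# Ranges quoted in the text.
DENSITY_RANGE = (125.0, 215.0)    # kg/m^3
STRENGTH_RANGE = (180.0, 360.0)   # MPa

# Widest envelope consistent with the ranges: weakest at heaviest,
# strongest at lightest. Actual samples fall somewhere in between.
lower_bound = specific_strength(STRENGTH_RANGE[0], DENSITY_RANGE[1])
upper_bound = specific_strength(STRENGTH_RANGE[1], DENSITY_RANGE[0])

# "118% higher strength" and "68% higher stiffness" as factors.
strength_factor = multiplier(118.0)
stiffness_factor = multiplier(68.0)
```

Read this way, "118% higher" means a little more than double the strength of a standard lattice at the same density, not 1.18 times it.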

Now, translate those numbers into outcomes. Aircraft airframes and interior structures shed kilograms, extending range or payload without compromising safety. Electric vehicles swap heavy metal reinforcements for nanoarchitected crash members that absorb energy more efficiently, opening room for batteries or reducing pack mass outright. Satellites launch lighter and survive harsher vibrational environments. Surgical implants gain strength without bulk, improving osseointegration and comfort. Consumer gear (helmets, racquets, bikes) gets faster, safer, and quieter in a single move. Even wind turbine blades and robotic arms can be re-imagined with internal lattices tuned, cell by cell, for local loads.

But the deeper revolution is organizational. Materials science is becoming a software discipline. Instead of discovering one miraculous alloy every decade, teams will iterate architectures weekly, pushing new designs through automated loops: simulate → print → pyrolyze → test → refit the model. Property targets stop being wish lists and start being prompts: “maximize specific strength at density < 200 kg/m³, under combined compression and shear, while preserving manufacturability.” The optimizer does the rest, proposing structures no human would sketch and proving them on the bench. The bottleneck shifts from serendipity to throughput.
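The shape of such a loop can be sketched in a few lines. Everything here is a stand-in: `toy_bench_test` is a made-up formula rather than real physics, `node_thickening` is a hypothetical design parameter, and sampling near the best design so far replaces the model refit that a real Bayesian optimizer would perform. The point is only to show a property target acting as a constraint on an automated propose-and-test cycle.

```python
import random
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Target:
    max_density: float  # kg/m^3; designs at or above this are rejected


@dataclass(frozen=True)
class Result:
    node_thickening: float  # hypothetical design parameter in [0, 1]
    density: float          # kg/m^3
    strength: float         # MPa


def toy_bench_test(x: float) -> Result:
    """Made-up stand-in for simulate -> print -> pyrolyze -> test."""
    density = 120.0 + 100.0 * x
    strength = 150.0 + 400.0 * x * (1.0 - 0.5 * x)
    return Result(x, density, strength)


def specific(r: Result) -> float:
    """Objective: specific strength, MPa·m^3/kg."""
    return r.strength / r.density


def run_loop(target: Target, rounds: int, seed: int = 0) -> Tuple[Optional[Result], List[Result]]:
    rng = random.Random(seed)
    best: Optional[Result] = None
    history: List[Result] = []
    for _ in range(rounds):
        # Propose: explore uniformly until something feasible exists, then
        # sample near the incumbent (a crude substitute for refitting a model).
        if best is None:
            x = rng.random()
        else:
            x = min(1.0, max(0.0, rng.gauss(best.node_thickening, 0.1)))
        result = toy_bench_test(x)
        history.append(result)
        # Keep the result only if it meets the target and beats the incumbent.
        if result.density < target.max_density and (
            best is None or specific(result) > specific(best)
        ):
            best = result
    return best, history


target = Target(max_density=200.0)
best, history = run_loop(target, rounds=20)
```

Swapping the toy test for a finite-element solver or a real bench measurement, and the sampling rule for a trained surrogate, turns this skeleton into the kind of loop the paragraph describes.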

Skeptics will point to scale, cost, and durability. Fair. Two-photon systems are fast but not yet factory-fast; pyrolysis can warp large parts; stitching fields of view can introduce defects. All solvable. Printing with parallel laser foci is already three orders of magnitude faster than first-gen tools; process control during pyrolysis is improving; hybrid approaches (printing high-value lattice cores and overmolding them) can bridge the gap until full-scale direct printing matures. Meanwhile, the physics is on the side of the architects: as features shrink, flaws diminish, interfaces strengthen, and performance climbs.

The most provocative question isn’t whether these materials will escape the lab. It’s what happens when every product team can “dial-a-material” for each part: one lattice for torsional stiffness under thermal cycling, another for blast resistance at ultralow weight, a third tuned for acoustic damping and heat spread. Supply chains shift from stock shapes and commodity alloys to digital inventories of printable metamaterials. Design reviews add a new slide: the material genome of the part, version-controlled like code. In five years, we’ll stop asking whether AI can design; we’ll ask whether we should ever design without it.

A century ago, steel and aluminum defined what we could build. Today, generative nanolattices define what we can imagine. The next airliner wing will not be “made of carbon.” It will be made of a billion decisions—each a curve in a strut, each learned by an algorithm, each printed by light—woven into a featherweight structure that turns gravity into a suggestion.

Read more on related breakthroughs:

- AI accelerates the discovery of high-performance materials

- 3D nano-architected metamaterials break strength-to-weight records