By Futurist Thomas Frey

The Minority Report Problem Is Already Here

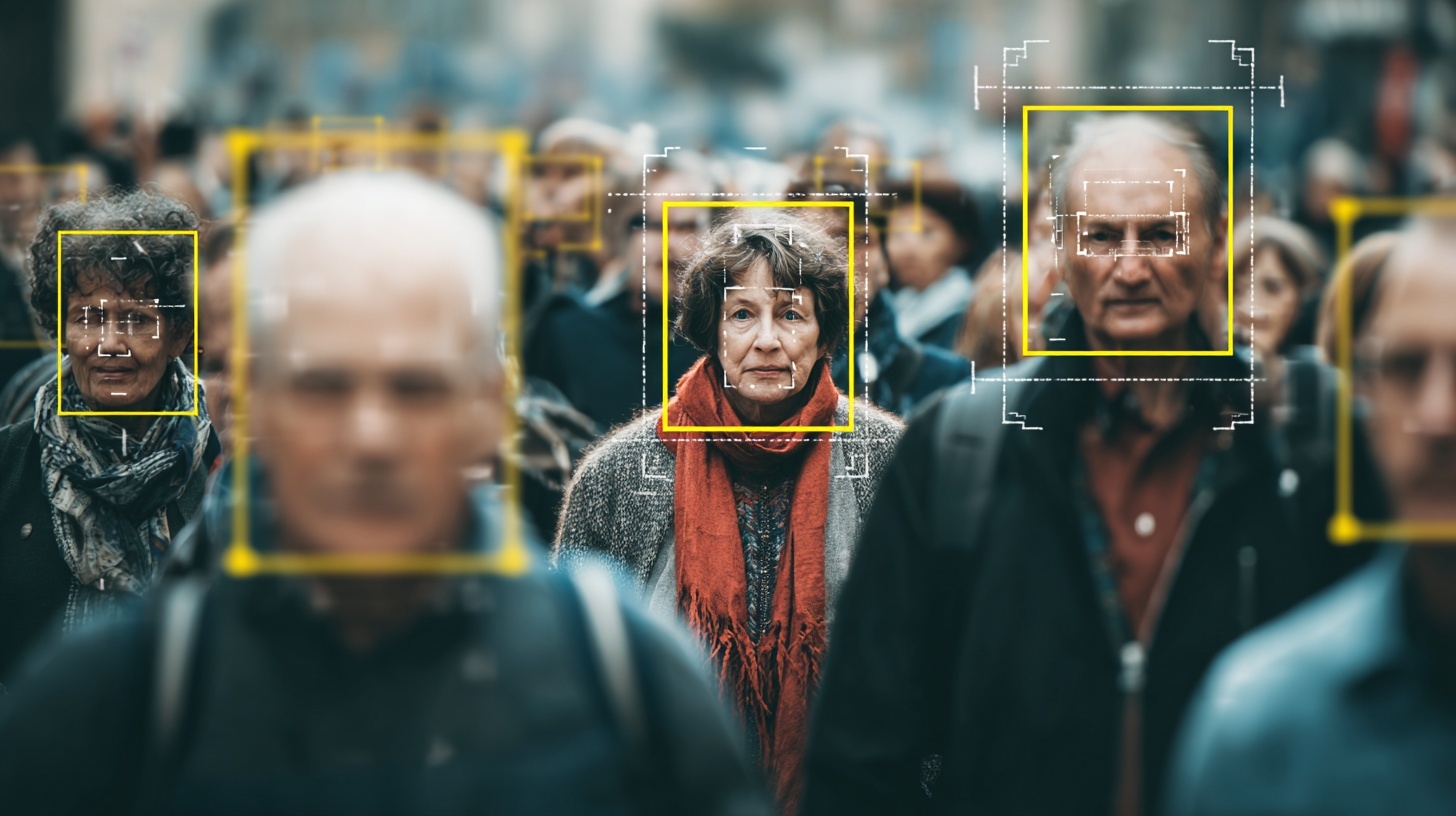

By 2032, most crimes won’t be stopped by catching perpetrators in the act—they’ll be interrupted before the act occurs because AI systems detected suspicious patterns and flagged the risk. Not science fiction. Not hypothetical. The technology exists now, and the deployment is already beginning in cities worldwide.

Sensors embedded throughout urban environments are learning to recognize motion patterns associated with criminal behavior. The way someone approaches an ATM. How they scan a parking lot. The body language preceding a mugging. Vocal stress signatures that indicate deception or violent intent. Anomalous behaviors that deviate from typical patterns in ways that correlate with criminal activity.

The AI doesn’t need to understand why these patterns predict crime—it just needs to recognize that they do. Machine learning systems trained on millions of hours of surveillance footage have become eerily good at predicting when someone is about to commit a crime, often minutes before it happens. Accuracy rates are already surpassing human intuition, and they’re improving exponentially.

The unusual part isn’t the technology; it’s the implication. We’re shifting from punishing crimes that happened to preventing crimes that might happen. From catching criminals to identifying people displaying pre-criminal patterns. From investigating acts to monitoring intentions. And nobody has figured out the ethics of arresting someone for something they were about to do but hadn’t yet done.

How Pattern-Based Crime Detection Actually Works

The systems operate on correlation, not causation. They don’t know you’re planning to rob someone; they recognize that your movement pattern, vocal stress indicators, and behavioral anomalies match patterns that preceded previous robberies in the training data. You’re walking slowly past storefronts at 2 AM, repeatedly glancing at door locks, checking over your shoulder, with an elevated heart rate picked up by remote sensors. The algorithm flags you as high-risk.
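To make "correlation, not causation" concrete, here is a minimal sketch of how such a risk score might be computed. Everything in it is an assumption for illustration: the `Observation` features, the `WEIGHTS`, `BIAS`, and `THRESHOLD` values, and the logistic scoring are hypothetical and do not describe any real deployed system. The point is that the model only adds up signals that co-occurred with past incidents; it has no representation of intent.

```python
import math
from dataclasses import dataclass


@dataclass
class Observation:
    """Hypothetical per-person features a sensor network might extract."""
    walking_speed_mps: float        # meters per second
    lock_glances_per_min: float     # glances toward door locks
    shoulder_checks_per_min: float  # looks back over the shoulder
    heart_rate_bpm: float           # from a hypothetical remote sensor
    hour_of_day: int                # 0-23, local time


# Illustrative weights only. A deployed system would learn them from
# historical incident data, along with whatever biases that data contains.
WEIGHTS = {
    "slow_walk": 1.2,
    "lock_glances": 0.8,
    "shoulder_checks": 0.6,
    "elevated_hr": 0.9,
    "late_night": 1.0,
}
BIAS = -4.0
THRESHOLD = 0.7  # hypothetical alert cutoff


def features(obs: Observation) -> dict[str, float]:
    """Turn raw observations into signals the model correlates with past incidents."""
    return {
        "slow_walk": max(0.0, 1.2 - obs.walking_speed_mps),
        "lock_glances": obs.lock_glances_per_min,
        "shoulder_checks": obs.shoulder_checks_per_min,
        "elevated_hr": max(0.0, (obs.heart_rate_bpm - 90.0) / 10.0),
        "late_night": 1.0 if obs.hour_of_day < 5 else 0.0,
    }


def risk_score(obs: Observation) -> float:
    """Logistic score in (0, 1): a weighted sum of correlated signals, no notion of intent."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in features(obs).items())
    return 1.0 / (1.0 + math.exp(-z))


def flag(obs: Observation) -> bool:
    """Raise an alert when the score crosses the (hypothetical) threshold."""
    return risk_score(obs) >= THRESHOLD


# The 2 AM scenario described above, a daytime commuter, and a resident
# who locked themselves out and is checking doors: same inputs, same score.
night_walker = Observation(0.5, 3.0, 2.0, 110.0, 2)
commuter = Observation(1.4, 0.0, 0.2, 80.0, 8)
locked_out_resident = Observation(0.5, 3.0, 2.0, 110.0, 2)

for label, obs in [("night walker", night_walker), ("commuter", commuter),
                   ("locked-out resident", locked_out_resident)]:
    print(f"{label}: score={risk_score(obs):.2f} flagged={flag(obs)}")
```

In this sketch the night walker scores about 0.96 and is flagged, while the commuter scores about 0.02. The locked-out resident produces exactly the same score as the night walker, because the model sees only the features, not the reason behind them.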

What happens next depends on the jurisdiction and implementation. In some cities, police receive alerts and increase patrols in the area. In others, the system triggers direct intervention—security approaching you, lights suddenly brightening, automated announcements that you’re being monitored. In the most aggressive implementations, police detain you for questioning based solely on pattern matching, before you’ve committed any crime.

Crime rates in pilot cities have dropped dramatically. Muggings decrease when potential perpetrators realize they’re being monitored before they act. Burglaries decline when criminal patterns trigger immediate responses. Property crime plummets when the window between criminal intent and criminal act shrinks to seconds.

The system works. That’s the problem.

The Ethics Explosion Nobody’s Ready For

When AI flags someone as high-risk and intervention prevents a crime that would have occurred, that looks like success. But when AI flags someone as high-risk and intervention prevents behavior that might have been innocent, that’s pre-emptive harassment of people who weren’t actually going to commit crimes. The system can’t distinguish between the two scenarios because the crime didn’t happen—either because it was successfully prevented or because it was never going to occur.

Keep in mind that the patterns the AI learns reflect the biases in the training data. If police have historically over-policed certain neighborhoods, the training data will show more crimes in those areas, not because more crimes actually occur there but because more crimes get detected and recorded there. The AI learns that people in those neighborhoods exhibiting certain patterns are high-risk, creating a feedback loop that perpetuates and amplifies existing biases.
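The feedback loop can be illustrated with a deliberately simplified, hypothetical model. Two neighborhoods have identical true crime, but crime is only recorded where patrols are sent, and patrols are sent wherever recorded crime is highest. The greedy "patrol the hotspot" rule, the daily rates, and the starting counts are all assumptions chosen for clarity; real allocation schemes are more gradual. The sketch shows only how a small historical imbalance in the records can grow even when the underlying behavior is the same.

```python
def simulate_patrols(true_daily_crimes: list[int],
                     initial_records: list[int],
                     days: int) -> list[int]:
    """Hypothetical model: only crime in the patrolled neighborhood gets recorded."""
    records = list(initial_records)
    for _ in range(days):
        # Send patrols wherever recorded crime is highest (ties go to the lower index).
        target = max(range(len(records)), key=lambda i: records[i])
        # Crime occurs everywhere at its true rate, but only the patrolled
        # neighborhood's incidents are observed and added to the record.
        records[target] += true_daily_crimes[target]
    return records


true_rate = [5, 5]   # identical underlying crime in both neighborhoods
start = [11, 10]     # a small historical imbalance in recorded crime
final = simulate_patrols(true_rate, start, days=30)
share_a = final[0] / sum(final)
print(final, f"{share_a:.0%}")  # [161, 10] 94%
```

Neighborhood A starts with about 52% of recorded crime and ends with about 94%, even though both neighborhoods had exactly the same true rate throughout. A model trained on `final` would "learn" that A is far more dangerous.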

Young men of color walking in groups trigger alerts more frequently than other demographics because the training data reflects decades of discriminatory policing. Poor people exhibiting stress behaviors get flagged as potential criminals when wealthy people exhibiting identical stress don’t, because the wealthy aren’t represented in the dataset the same way. The AI doesn’t see race or class; it sees patterns that correlate with race and class because that’s what the data contains.

The Impossible Questions We’re Avoiding

Is it better to prevent crimes before they happen, even if that means harassing innocent people whose behavior happens to match criminal patterns? Or is it better to let crimes occur so we only punish actual criminals rather than people displaying pre-criminal indicators? There’s no clear answer because we’re weighing actual harm prevented against hypothetical rights violations for people who might not have committed crimes anyway.

What about people who were genuinely planning crimes but changed their minds before acting? If the AI flags them based on pre-criminal patterns and police intervene, are we punishing thought crimes? Intent without action? At what point does “I was thinking about it but decided not to” become protected versus punishable?

The legal framework assumes criminal justice happens after crimes occur. We investigate, gather evidence, prove beyond reasonable doubt that someone committed a specific illegal act, then impose consequences. Pattern-based intervention inverts that entire framework: we intervene before the act, based on a probabilistic assessment that someone might commit a crime, without evidence of actual criminal behavior beyond correlation with patterns.

How do you defend yourself against an algorithm that says you were exhibiting pre-criminal patterns? You can’t prove you weren’t going to commit a crime because the intervention prevented the timeline where your intentions would have become manifest. You’re guilty of looking guilty, and there’s no way to demonstrate innocence because innocence would require completing the behavior to prove it was harmless—which the system prevented.

Where This Goes That Nobody Wants to Admit

The trajectory is clear: as pattern recognition improves and deployment expands, we’re moving toward a world where criminal behavior becomes nearly impossible in monitored spaces—not because people become more ethical but because AI systems interrupt criminal acts before they occur. Crime doesn’t disappear—it gets compressed into the seconds between forming intent and acting on it, a window too small for traditional criminal behavior.

This sounds utopian until you consider the cost to privacy and autonomy, and what it means to live in a society that assumes you’re always potentially criminal and constantly monitors you for patterns indicating risk. We’re not building security systems; we’re building pre-crime infrastructure that treats everyone as a suspect requiring continuous assessment.

Final Thoughts

The technology for pattern-based crime detection exists and improves daily. The ethical frameworks for managing it don’t exist and won’t emerge quickly enough to guide deployment. We’re rolling out systems that interrupt criminal acts before they occur because the crime reduction statistics are compelling and the civil liberties concerns are abstract and complicated.

By the time we realize we’ve built a society where everyone is perpetually monitored for pre-criminal patterns, where being flagged by an algorithm can destroy your life without you ever committing a crime, where the gap between criminal intent and criminal punishment has compressed to nothing—by then, the infrastructure will be too embedded to remove without making cities feel dangerously unprotected.

After all, once you’ve experienced a world where AI prevents crimes before they happen, going back to a world where crimes actually occur feels unacceptably risky. Even if prevention requires treating everyone as potential criminals awaiting only the right pattern match to confirm the suspicion.
